MVP is not a Thing

Breaking up learning opportunities into smaller experiments can help you gain a deep understanding of the fundamental elements of a product and what will make them succeed (or fail) together.

There are many different definitions of a minimum viable product (MVP). Some people see it as a product with the minimum number of features necessary to be useful to customers. Others view it as a proof of concept that can be used to test a business idea or gather feedback from potential users. Both definitions contain elements of truth, but neither paints the full picture. An MVP should be defined by its ability to help a software development team learn something important that will feed back into the product development process.

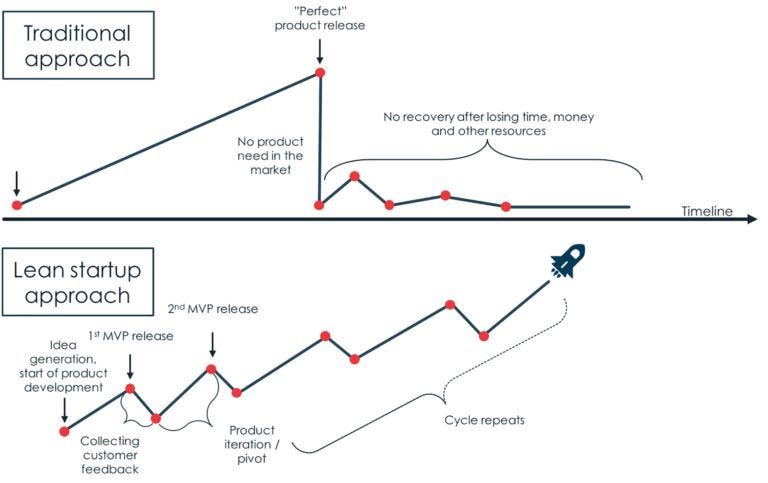

To use an MVP effectively, you need explicit hypotheses and assumptions, because each one is an opportunity to validate or invalidate a key belief that underpins the product idea. MVP is a step, not a “thing.”

MVP should be defined by its ability to help a team learn something important that improves the information they have to make decisions.

For example, let's say you're developing a new social media app. Your feature hypothesis might be that users will find the app engaging. Your outcome hypothesis might be that the app will generate a certain number of daily active users within the first six months of launch. Your learning hypothesis might be that the app's user interface is intuitive and easy to use. And your assumptions might include the belief that users will be willing to share their personal information in order to connect with others on the app.

Based on what you need to learn, you can then design experiments that give you the data to determine whether or not your assumptions are correct. You don’t need to build “MVP” features for all of it. In fact, it’s likely much less risky to validate your outcome hypothesis first, so that you gain confidence that your business drivers work. Once you have validated the outcome at a basic level, pick another atomic assumption to test.
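One way to picture this ordering is as a small backlog of hypotheses. The sketch below is only an illustration: the `Hypothesis` class, the `next_to_test` function, and the `kind` labels are hypothetical names borrowed from the social media example, not an established framework. It assumes the only ordering rule is the one described above (outcome hypotheses first) and otherwise keeps the order in which the items were written down.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Hypothesis:
    kind: str                         # "feature", "outcome", "learning", or "assumption"
    statement: str
    validated: Optional[bool] = None  # None means "not tested yet"


def next_to_test(backlog: List[Hypothesis]) -> Optional[Hypothesis]:
    """Return the next untested hypothesis, preferring outcome hypotheses."""
    untested = [h for h in backlog if h.validated is None]
    if not untested:
        return None
    # False sorts before True, so outcome hypotheses win; min() keeps the
    # first item on ties, which preserves the original backlog order.
    return min(untested, key=lambda h: h.kind != "outcome")


# Backlog taken from the social media app example.
backlog = [
    Hypothesis("feature", "Users will find the app engaging"),
    Hypothesis("outcome", "The app reaches its daily active user target within six months"),
    Hypothesis("learning", "The user interface is intuitive and easy to use"),
    Hypothesis("assumption", "Users will share personal information to connect with others"),
]

first = next_to_test(backlog)
first.validated = True            # record the result of the first experiment
second = next_to_test(backlog)

print(first.kind, "->", second.kind)
```

Each item in the backlog maps to its own experiment, and none of those experiments has to look like the finished product.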

Breaking up your hypotheses into smaller experiments can feel counter-intuitive, because sometimes what you test is nothing like the product you intend to build. In reality, you gain a deep understanding of the fundamental elements of your product and what you need to do to ensure they succeed. The biggest risk in this approach is figuring out the right way to combine the atomic pieces into a product. But by that time, you know the minimum necessary to make it all work.

What part of this process is the “MVP”? The real question is, if you learned something useful from each experiment, does it matter?