Against Being "Data-Driven"

Data is a compass, not a GPS

My first job out of college was at an advertising agency in Chicago. During my first or second year there, one word seemed to be in every campaign: “Pure.”

On my daily commute, I saw it plastered across billboards and in stores: “Pure Flavor” for Starbucks coffee, “Absolutely Pure” cocoa essence from Cadbury, “Pure Juniper” for gin.

It was the go-to buzzword for every brand. But the more I saw it, the less it seemed to mean anything at all. If everything was pure, then pure was just a gimmick: something brands said because they thought they were supposed to. It apparently resonated with customers, but it was meaningless at its core.

When Everything Is “Pure,” Nothing Is

This is exactly how I feel now when I hear a company say they’re “data-driven.”

On its face, it resonates. Who wouldn’t want more information and the ability to make decisions based on better facts and numbers? But I’ll be honest: it makes me cringe.

When everyone is data-driven, the label stops meaning much. And when taken too far, being “data-driven” can actually make teams worse at decision-making. Most companies that claim to be data-driven are great at collecting data, but not great at making it useful.

Why is this a problem? I’ve seen firsthand that when teams have all the data, it tends to replace human judgment instead of supporting it. When that happens, teams stop thinking. They mistake correlation for causation, or they get paralyzed when they don’t have complete information.

Data should guide decision-making. It should never replace it.

The Problem With Being “Data-Driven”

A dogmatic data-driven approach assumes that if you just track the right numbers, the right answers will emerge. But decisions are rarely that simple.

Here’s where it fails:

The most measurable things aren’t always the most meaningful.

Conversion rates, DAUs, NPS scores—they’re easy to track. But the most important parts of product success are often harder to measure: Are we solving a real problem? Are we improving how customers feel about our product? Are we playing a good long game?

Data only tells you what is happening—not why.

Imagine a feature that users don’t engage with. The data says, “No one is clicking this.” What does that actually mean?

Do users not understand it?

Do they not need it?

Is it buried in the UI?

Is it valuable but just used in a different way than expected?

The data can’t tell you. But a conversation might. And if you only prioritize what’s easy to measure, you risk making decisions that look good on a dashboard but don’t actually improve the experience.

Optimization and efficiency kill innovation.

If you let data drive every decision, you’ll always do more of what’s already working. That sounds smart—until you realize it means you’ll never take a big swing.

Every breakthrough product starts as a bet. And most bets don’t have clean, quantifiable justifications upfront. If they did, someone else would have already built them. A/B tests and analytics help you refine an idea. They don’t help you find the next big one.

What to Be Instead: Data-Informed, Not Data-Driven

I’m not saying you should ignore data. I’m saying don’t allow data to become a gimmick. Teams that win aren’t data-driven. They’re data-informed.

They use data to validate and challenge intuition, not replace it. They treat dashboards as starting points for conversations, not as decision-makers. They remember that data is a tool, not a verdict.

Being data-informed means understanding that numbers don’t think. People do.

How to Use Data Without Letting It Use You

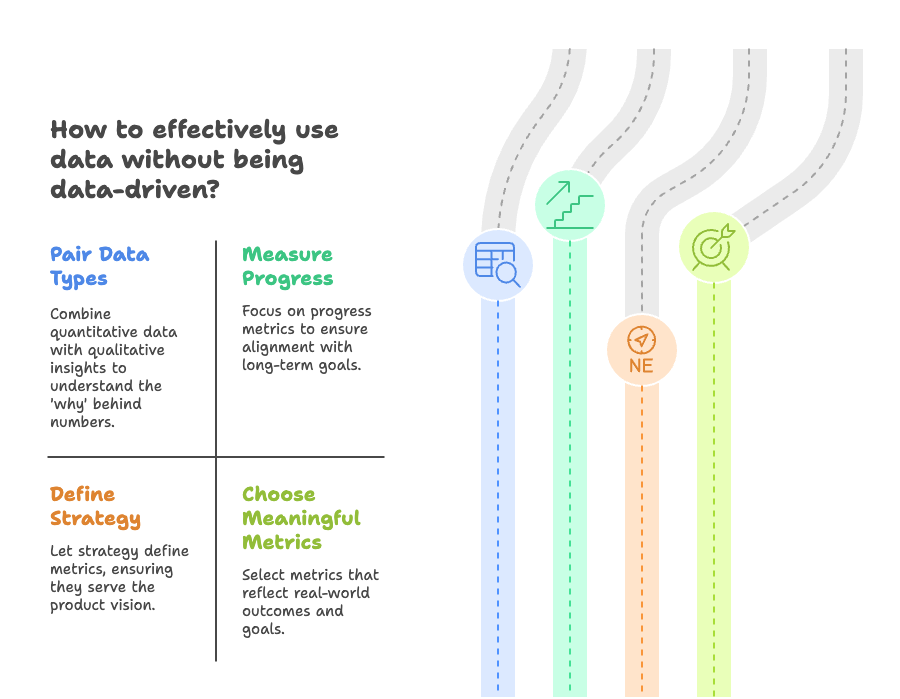

If you want to avoid the pitfalls of being data-driven, but still use all that data you’ve accumulated, here are a few ways to shift your approach:

Pair Quantitative Data with Qualitative Insights: Numbers tell you what is happening. Talking to customers tells you why. For every KPI you track, ask: “What customer behaviors drive this number?” “What unmeasured factors might influence it?” “Do qualitative insights confirm or contradict what the data suggests?” If you don’t know why a number is moving, you don’t really understand it.

Measure Progress, Not Just Performance: Performance metrics (like conversion rates) tell you how well your system is working right now. Progress metrics (like engagement over time) tell you if you’re actually moving toward your goal. High performance on the wrong thing isn’t success; it’s just efficiency at the wrong work.

Don’t Let Metrics Define Strategy: Many teams set goals based on what’s easy to measure. “Increase NPS by 10 points.” “Improve conversion rate by 5%.” But metrics should follow strategy, not define it. An approach I’ve used:

First, start with a product vision. Before looking at any numbers, ask: What is the product trying to achieve? Are you trying to reduce friction for customers? Do you want to drive long-term engagement? Are you aiming to make a specific task effortless? Your vision should be qualitative and directional, not just a collection of performance indicators. Metrics should serve this vision—not the other way around.

Next, define what success looks like in human terms. Success is more than a number on a dashboard. In this step, translate your product vision into something tangible and meaningful. Ask: What does a successful user experience feel like? How do you know when you’ve truly solved a problem? What should customers be able to do more easily than before?

For example, instead of saying “We want a higher NPS,” ask “What would make customers recommend us without hesitation?”

Instead of “We want to increase conversion rates,” ask “How do we ensure customers feel confident completing this action?”

Again, metrics emerge as a way to track progress toward these real-world outcomes, not as the goal itself.

Finally, choose metrics that indicate progress. Once you have a vision and a human-centered definition of success, then—and only then—should you choose metrics. Pick metrics that reflect the underlying goal, not just what’s easy to measure.

If the goal is habit formation, track repeat engagement rather than one-time sign-ups.

If the goal is trust, track resolution rate of customer issues rather than just satisfaction scores.
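To make the habit-formation example concrete, here is a minimal Python sketch of how the two numbers can diverge. The event log, the user IDs, and the threshold of two active weeks are all invented for illustration; they are assumptions, not a standard definition of repeat engagement.

```python
from collections import defaultdict
from datetime import date

# Hypothetical event log: (user_id, date the user was active).
events = [
    ("ana", date(2024, 1, 1)),
    ("ana", date(2024, 1, 9)),
    ("ana", date(2024, 1, 16)),
    ("ben", date(2024, 1, 2)),
    ("cy", date(2024, 1, 3)),
    ("cy", date(2024, 1, 4)),
]


def repeat_engagement_rate(events, min_active_weeks=2):
    """Share of users active in at least `min_active_weeks` distinct ISO weeks."""
    weeks_by_user = defaultdict(set)
    for user_id, day in events:
        iso = day.isocalendar()
        weeks_by_user[user_id].add((iso[0], iso[1]))  # (ISO year, ISO week)
    if not weeks_by_user:
        return 0.0
    repeaters = sum(
        1 for weeks in weeks_by_user.values() if len(weeks) >= min_active_weeks
    )
    return repeaters / len(weeks_by_user)


sign_ups = len({user_id for user_id, _ in events})
rate = repeat_engagement_rate(events)
print(f"sign-ups: {sign_ups}, repeat engagement: {rate:.0%}")
```

In this made-up log, three people signed up but only one came back in a later week. A dashboard that shows only the sign-up count would hide that.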

Recognize that no single metric tells the full story. Pair quantitative data (e.g., activation rate) with qualitative insights (e.g., user interviews). Monitor unintended consequences—if conversion rates go up but long-term retention drops, you might be optimizing the wrong thing.
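One way to watch for unintended consequences is a simple guardrail check: when the metric you are pushing goes up, look at whether a metric you care about fell at the same time. The sketch below is a rough illustration in Python. The metric names, the numbers, and the 2% tolerance are assumptions made up for this example.

```python
def check_guardrails(before, after, primary, guardrails, tolerance=0.02):
    """Return guardrail metrics that fell while the primary metric rose.

    `before` and `after` map metric names to values for two periods.
    `tolerance` is the relative drop allowed before a guardrail is flagged.
    Assumes every baseline value in `before` is nonzero.
    """
    if after[primary] <= before[primary]:
        return []  # the primary metric did not improve, so there is no trade-off to flag
    flagged = []
    for name in guardrails:
        relative_change = (after[name] - before[name]) / before[name]
        if relative_change < -tolerance:
            flagged.append((name, relative_change))
    return flagged


# Made-up numbers for illustration only.
before = {"conversion_rate": 0.040, "retention_90d": 0.30}
after = {"conversion_rate": 0.046, "retention_90d": 0.26}

flagged = check_guardrails(before, after, "conversion_rate", ["retention_90d"])
for name, change in flagged:
    print(f"{name} moved {change:+.1%} while conversion_rate improved; worth a conversation")
```

A flag like this doesn’t make the decision. It only tells you which number to go ask people about.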

By taking this approach, you set your team up to work toward meaningful outcomes, not just chase numbers.

Data Is a Mirror, Not a Map

At its best, data helps teams see reality more clearly. At its worst, it narrows vision instead of expanding it.

If you let numbers drive your decisions, you’ll optimize yourself into a corner—faster and faster, but never toward anything new.

If you use data to inform judgment, challenge assumptions, and guide exploration, you’ll make better decisions than any dashboard ever could.

So before you call yourself a data-driven team, ask yourself:

Are you making decisions? Or is the data making them for you?